The Managers' Guide № 129

AI gains are modest. Slow feedback loops cost you a full engineer. You already know the hard call. And the best work doesn't feel like grinding. Research-backed insights on what actually drives engineering productivity.

Shoutout to my hair stylist for referring to my mass of grey hairs as my "natural highlights."

Alice McFlurry

Applying AI where it matters

- 🧠 Task perception drives AI adoption: Developers' feelings about tasks strongly influence their openness to AI help. For important, high-stakes, or difficult work, they welcome AI as a safety net — but still want to stay in control. For tasks tied to their professional identity, they're more reluctant to hand over the reins.

- 🤝 "People work" stays human: Developers firmly limit AI involvement in interpersonal tasks like mentoring, relationship-building, and crafting AI features themselves. These require trust, empathy, and judgment — skills they believe AI can't replicate. AI is only welcome "at the margins" here.

- 🔧 Operational tasks are prime AI territory: For repetitive, tedious work — maintaining systems, setting up environments, updating docs — developers strongly want AI support. The catch: it must be safe, reliable, and easy to supervise. No autonomous risky changes allowed.

- 📊 A quadrant map for prioritization: The researchers created a practical framework plotting "need for AI" vs. "current AI use." Build (high need, low use) = opportunity zones. Improve (high need, high use) = focus on reliability. Sustain (low need, high use) = maintain, don't over-invest. De-prioritize (low need, low use) = keep human-led.

- 🔒 Safety and transparency are non-negotiable: Across the board, developers want AI that's reliable, protects sensitive data, explains itself, and is easy to override — especially for high-stakes technical work where mistakes create real risk.

- 👤 Personal traits matter: Junior developers and those with more AI experience use it more often. Risk-tolerant, AI-enthusiastic individuals lean on it heavily for demanding work, while cautious developers remain careful when stakes are high.
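The quadrant map above can be sketched as a small lookup. This is only an illustration: the 0-to-1 scores, the 0.5 cut-off, and the function name `quadrant` are assumptions made here, not something the researchers specify.

```python
def quadrant(need: float, use: float, threshold: float = 0.5) -> str:
    """Place a task on the "need for AI" vs. "current AI use" map.

    Scores and threshold are hypothetical 0-to-1 values chosen for illustration.
    """
    high_need = need >= threshold
    high_use = use >= threshold
    if high_need and not high_use:
        return "Build"          # opportunity zone
    if high_need and high_use:
        return "Improve"        # focus on reliability
    if high_use:
        return "Sustain"        # maintain, don't over-invest
    return "De-prioritize"      # keep human-led


# Hypothetical task scores, made up for the example.
print(quadrant(need=0.9, use=0.2))  # Build
print(quadrant(need=0.2, use=0.1))  # De-prioritize
```

Where you draw the line between "high" and "low" is a judgment call for your own team; the labels are the part that comes from the framework.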

You Know What To Do

- 🎯 You already know the answer: Smart people with context on a tricky situation almost always know exactly what they need to do (staysaasy). Whether it's firing an exec, doing a layoff, cutting a product, or shutting down a business: if you're asking the question, you probably already know the answer deep down.

- 😰 Avoidance creates more pain: People hate confronting difficult decisions, so they develop coping mechanisms to dodge conflict. The irony is they end up accumulating far more aggregate pain than if they'd just bitten the bullet early.

- 🚨 Watch for the word "just": It's a telltale sign of an excuse disguised as an argument. Phrases like "we just need another quarter" or "let's just give it a few more weeks" are red flags that you're rationalizing avoidance.

- 😣 Lean into discomfort: When torn between choices, the path that makes you most uncomfortable is almost always the right one. Human tendency is to avoid hard decisions, so your errors will only go in one direction — toward avoidance.

- 👂 Favor proximity over title: People closest to a problem know what to do. As organizations grow, the comprehension gap between on-the-ground experts and distant leadership widens dramatically. Seek feedback from those with direct context, not just seniority.

- 📊 Data can be a trap: The cult of being "data-driven" trains teams to look for subtle nuances that don't exist 99% of the time. It favors certainty over speed. If you're an experienced leader with clarity, don't let the siren call of more data gaslight you into analysis paralysis.

- ⚡ You owe your team decisiveness: Leaders often freeze because they don't want to be blamed for wrong calls. But your team joined because they trust your judgment. They'll respect decisive action more than a theoretically higher hit-rate achieved through endless deliberation.

The Hidden Cost of Slow Feedback Loops

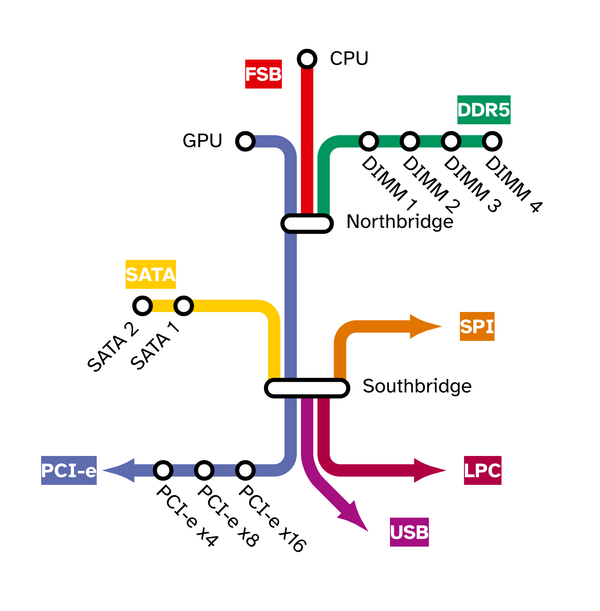

- 🚗 The car analogy: Would you tow a car to 100 km/h on a highway just to test if the engine starts? Of course not. Yet teams constantly say "we need to deploy this to staging to verify it works" for simple changes. Your application shouldn't need a fully-fledged environment to verify basic behavior.

- ⏱️ The ideal feedback hierarchy: Local testing should be fastest (rebuild and test independently), staging second (more steps required), and production slowest. But in reality, many teams either have no local environment at all, or it's actually slower than deploying to staging — an entirely self-inflicted problem.

- 🐸 The boiling frog effect: Projects don't start broken. At the beginning of every software project, the number of dependencies is zero (revontulet). With each new dependency and rushed feature, the feedback loop degrades slightly. Since you're part of the process, you don't notice until it becomes unbearably painful.

- 📉 The hidden math — losing a full engineer: If 8 engineers each spend 10 extra minutes per verification attempt, 6 times per day, that equals 8 hours daily — the capacity of one full-time engineer. You're paying for 8 but getting the output of 7.

- 🎯 Where are the targets? We set thresholds for code coverage and won't accept changes that breach them. But we don't set targets for "how long verification should take." If we did, engineers would naturally invest in keeping feedback loops healthy.

- 💸 Cumulative debt catches up: Skipping feedback loop maintenance looks like a short-term velocity win. But there's a turning point where accumulated slowdown overtakes any time "saved" — and from there, it's net-negative until addressed.

- 🛠️ Real example: The author joined a team that needed 20–30 minutes to deploy to staging just to verify a simple GET endpoint. After building a local setup, verification dropped to under a minute. Stop adding features and fix local verifiability — it's worth it.
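The "losing a full engineer" arithmetic above is easy to rerun with your own team's numbers. A minimal sketch follows; the function name and the 8-hour workday used to convert hours into engineers are assumptions made here for illustration.

```python
def lost_hours_per_day(engineers: int, extra_minutes: float, attempts_per_day: int) -> float:
    """Total hours per day the team spends waiting on slow verification."""
    return engineers * extra_minutes * attempts_per_day / 60


# Figures from the example above: 8 engineers, 10 extra minutes, 6 attempts a day.
hours = lost_hours_per_day(engineers=8, extra_minutes=10, attempts_per_day=6)
print(hours)  # 8.0

# Assuming an 8-hour workday, that is the capacity of one engineer.
WORKDAY_HOURS = 8  # assumption
print(hours / WORKDAY_HOURS)  # 1.0
```

Plugging in a measured wait time and attempt count gives a number you can put next to a code coverage threshold when arguing for a verification-time target.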

What if hard work felt easier?

- 🔄 Ease and alignment aren't indulgent, they're effective: When you stop trying to lead like someone else, and instead lean into your natural way of working, everything gets easier. And the results are often better, too (Substack). The assumption that work must feel draining to be valuable might be completely backwards.

- 🪑 Butts in seats ≠ results: The author describes sitting at her desk tired, clicking around, skimming articles, answering Slack — two hours of "work" with nothing accomplished. A walk, nap, or phone call would have been more productive. She shipped a full Community Library app in two weeks because it felt obvious to build.

- 📚 Learning happens naturally when you care: After having a baby, the author felt behind on AI and engineering trends. Tutorials and tips sat unopened. Then she built a silly Trader Joe's snack box app — and suddenly started learning Claude Code, auth flows, and email automations without effort. Learning to bring an idea to life felt easy; forcing herself to "sit down and learn" never worked.

- 🔥 "Hard work" often doesn't feel hard in hindsight: The author stayed up coding until 3am in college, built companies, worked at early-stage startups — objectively intense. But it never felt like grinding because she loved what she was doing and chose environments aligned with her interests.

- 👔 For leaders — don't mandate effort, find alignment: The most effective way to get output isn't tracking hours. It's helping people find work that feels obvious to them — tapping into their internal motivation and aligning team needs with what they actually want. That's where real momentum lives.

- ❓ Questions to find your ease: When has work felt surprisingly energizing? What would you do even if no one asked? Is there a "should" that's been sitting on your list for months — do you actually need to do it, or can you let it go?

- ⚡ The grind culture myth: Tech is glorifying long hours and butts-in-seats more than ever. There's an implicit assumption that if work doesn't feel difficult, you're not trying hard enough. But the work that flows easily — the things you'd do unprompted — might be where your real leverage lives.

How has AI impacted engineering leadership in 2025?

- 📊 Productivity gains are modest, so far: 60% of engineering leaders said AI has not significantly boosted team productivity (Substack). Only 6% saw gains above 30%, while 21% reported no benefit or even a decline. The transformative impact hasn't materialized yet.

- 👥 Job displacement fears are overblown (for now): 54% of respondents said AI will not impact headcount in 2025. Despite media narratives, most leaders don't expect AI to reduce team sizes this year.

- 💻 Coding assistants dominate use cases: 47% use AI for code generation, 45% for refactoring, and 44% for documentation. These practical, hands-on applications are where teams are finding the most traction.

- ⚠️ Leaders are worried about the long-term: 51% believe AI will have a negative long-term impact on the industry. Top concerns include code maintainability (49%) and the impact on junior developers entering the field (54%) — a potential gap in how new engineers learn and grow.

- 🔧 AI tooling is the top investment priority: Engineering leaders continue to evaluate and switch AI suppliers as the landscape evolves rapidly. Measuring and improving overall engineering performance is a growing focus area.

- 🎯 Treat AI as organizational change, not just tooling: Adoption succeeds when teams have clear guidance, psychological safety to experiment, and shared expectations. It's a leadership challenge around change management, team enablement, and long-term quality — not just picking the right tool.

- 🧪 Create space for continuous learning: With AI evolving rapidly, engineers need regular opportunities to experiment and build new skills. Encouraging structured exploration helps teams adapt as workflows shift.

That’s all for this week’s edition

I hope you liked it, and you’ve learned something — if you did, don’t forget to give a thumbs-up, add your thoughts as comments, and share this issue with your friends and network.

See you all next week 👋
