The Managers' Guide № 130

Discover essential strategies for modern software teams: learn how to survive the AI career shift, why writing code is often your least productive activity, and how to transform daily standups from status reports into empathy-building sessions.

It's weird how in the end “a penny for your thoughts” was actually more than AI companies were willing to pay

Mike Sheward

How I Provide Technical Clarity To Non-Technical Leaders

- 🎯 Core Mission: A staff engineer's highest-leverage work is providing technical clarity — helping non-technical decision makers understand what changes are possible within software systems

- 🧠 The Reality Gap: Even senior engineers often can't definitively answer basic questions about their own systems without "going to check" — software complexity makes complete understanding nearly impossible

- ❓ Key Questions Leaders Need Answered: Can features be safely delivered? Can changes be rolled back? Can rollouts be gradual? These require deep system understanding and careful code review to answer properly

- 🤝 The Technical Advisor Role: Staff engineers become unofficial advisors to VPs and directors, serving as an "abstraction layer" around technical complexity — much like how garbage collection abstracts memory management

- ⚖️ Balancing Confidence and Uncertainty: Engineers must project 95% confidence while internally managing 5% worry about "unknown unknowns" — the risks they haven't even thought to consider

- 💬 Communication Strategy: Saying "I think we can roll back safely, but I can't be sure because..." is less helpful than a clear "yes" or "no" — leaders need actionable answers, not technical anxiety

- 🎨 Three Requirements for Success: Good taste (knowing what to mention/omit), deep technical understanding through active coding, and confidence to present simplified views despite uncertainty

- 📈 Career Impact: Engineers who provide technical clarity often get promoted faster and are positioned on more important projects because they solve a high-value organizational problem

- 🚫 Not About Dishonesty: Simplifying complex technical realities for decision makers isn't lying — it's providing the right level of detail for effective decision-making

Software Survival 3.0

- 🌪 The Third Great Filter: Yegge identifies the current AI revolution as the third major “extinction event” for software engineers, following the Dotcom crash and the 2008 recession. He argues that “this time is different” because AI isn't just a productivity tool; it is fundamentally replacing human cognition in coding tasks, creating a structural shift rather than a temporary market cycle.

- 💀 The End of the Junior Engineer: The traditional mentorship model is collapsing. Since modern LLMs can perform at the level of a junior-to-mid-level developer instantly and almost for free, companies have lost the economic incentive to hire beginners and train them. The bottom rungs of the career ladder have essentially been sawed off.

- 🛠 Value Shifts to Generalists: Deep specialization is becoming a liability. To survive, engineers must pivot from being “coders” to being “product builders.” Yegge emphasizes that because AI handles the implementation details, the human value-add now lies in understanding the full stack, the business logic, and the user experience. In his words: “You are no longer a coder. You are an architect of AI agents.”

- 🚀 Force Multiplication is Mandatory: Ignoring LLMs is professional suicide. The only way to stay relevant is to utilize AI tools to increase your personal output by 10x or 100x. Engineers must learn to manage code generation rather than writing syntax manually, effectively moving up a level of abstraction.

- 📉 The Bloat is Over: The era of massive engineering teams doing very little work is finished. Due to high interest rates and AI efficiency, companies are realizing they can achieve more with significantly fewer people. Yegge warns that “the herd is being thinned,” and that the market will likely never return to the hiring frenzies of the past decade.

Coding Is When We’re Least Productive

- ❌ Productivity is not volume: The author attacks the “old dragon” resurfacing in the age of AI: the mistaken belief that productivity equals code output. Managers often push to maximize the time developers spend typing, assuming more lines of code mean more value, but this mindset creates a dangerous disconnect between activity and actual utility.

- 🏪 Go to where the problem is: In a story about a Point of Sale upgrade, the author explains how leaving the office to observe real users prevented a disaster. After visiting a store and testing scenarios on a real machine (a “model office”), the “mist cleared,” giving the author context and understanding that no specification document could match.

- 📉 Efficiency vs. Effectiveness: After the store visit, the author wrote only three lines of code in eight hours. Traditional metrics (like commit counts) would classify this as an unproductive day, yet those three lines saved the client from a costly error across 250 branches nationwide, proving that “some code is worth more than others.”

- 🛑 Coding can be the interruption: The piece argues that when developers are heads-down coding, they are blind to the actual problem. Time spent coding is time not spent asking questions and validating ideas. As the author notes, there is no IDE shortcut that tells you “you’re writing the wrong code.”

- 🔄 The feedback loop is the work: True productivity is measured by net value created, which comes from the “learning feedback loop” rather than punching keys. Producing code faster than you can validate it just means “piling assumptions on top of assumptions.”

The way I run standup meetings

- 🚫 Not a status report: The author strictly differentiates this meeting from a “daily scrum” or a status update. The goal is not to move tickets on a board or hold people accountable, but to share “interesting things” and blockers. Consequently, it is perfectly acceptable — and even encouraged — to share nothing on days where you have no relevant updates.

- 🤝 Empathy is the ROI: The primary benefit of the meeting is building empathy and cohesion rather than tracking tasks. By hearing a developer explain their struggles verbally, teammates gain context for future code reviews or understand why someone might be stressed. As the author puts it: “I’m yet to be convinced that reading a Slack message has the same impact than seeing someone share the same information verbally.”

- 🧠 Escaping the silo: The post addresses the common complaint of “I’m a designer/dev, I don’t care about the other side.” The author argues this is a career-limiting mindset. Engineers who listen to design updates identify shortcuts and implementation hurdles early, while designers who understand engineering constraints get more of their work actually built.

- 🤐 Permission to remain silent: If a team member has nothing to say, they can simply say “nothing really interesting since last time.” However, the author notes that if someone is silent for many days in a row, it signals a deeper problem, such as being stuck, feeling unsafe to share, or doing work they feel isn't valuable.

- ⏰ Strategic timing: The meetings are held at 12:03 PM, right before lunch. This serves two purposes: it creates a natural hard stop to keep the meeting short (10–15 mins), and for co-located teams, it acts as a low-effort team-building activity as everyone heads to lunch together afterward.

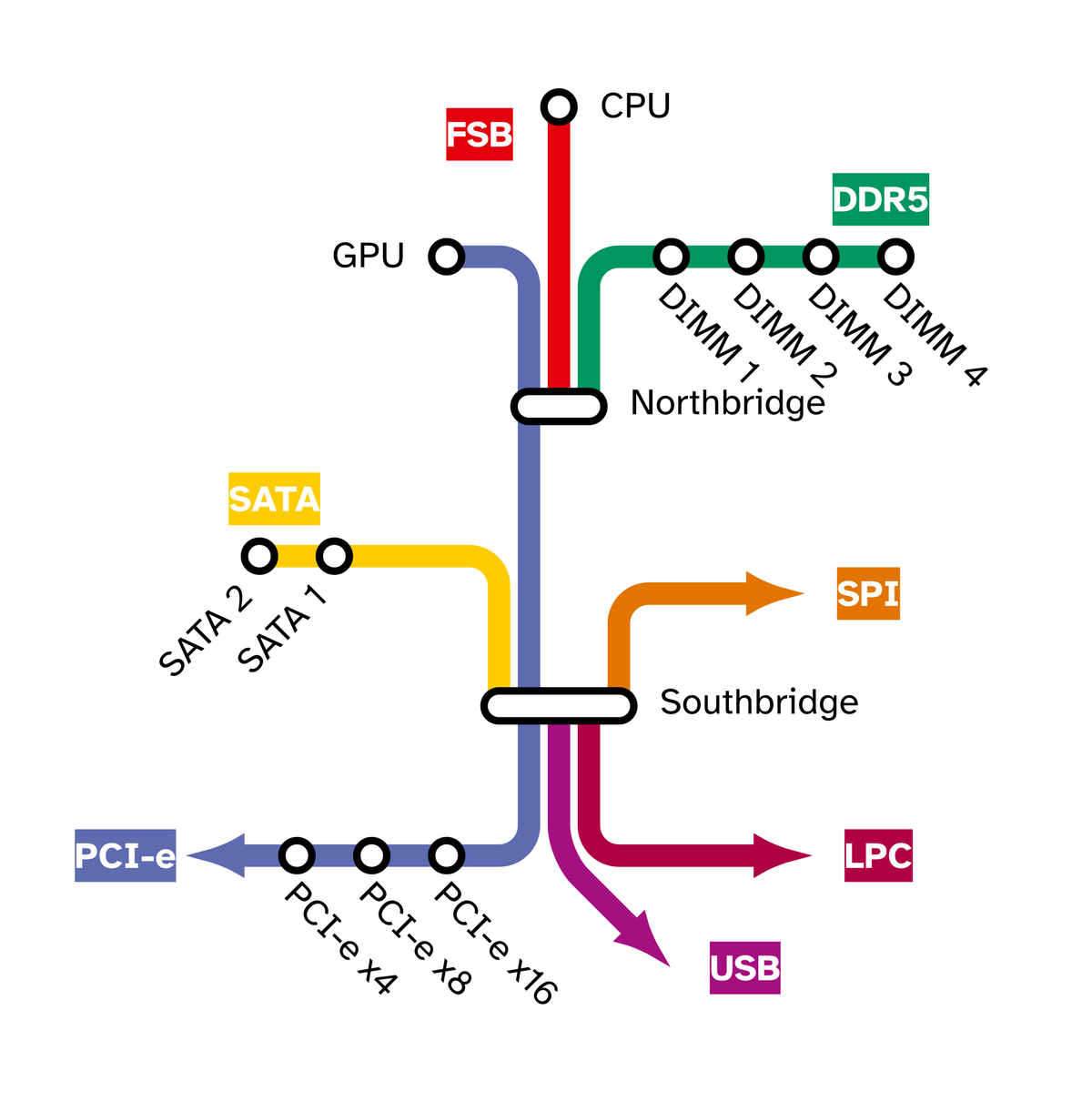

Agentic AI and Security

- 🔓 Core vulnerability — LLMs fundamentally cannot distinguish between instructions and data, treating everything they read as potentially executable commands

- ⚡ The Lethal Trifecta — Maximum risk occurs when systems have: sensitive data access, untrusted content exposure, and external communication abilities all at once

- 🎯 Prompt injection attacks — Attackers can embed hidden instructions in seemingly innocent content (like Jira tickets or web images) that LLMs will follow

- 🛡️ Primary defense strategy — Eliminate at least one element of the trifecta: limit sensitive data access, block external communication, or restrict untrusted content

- 📁 Credential protection — Never store production credentials in files; use environment variables and tools like 1Password CLI to keep secrets only in memory

- 🌐 Browser extensions are dangerous — LLM-powered browser tools with access to your real browser sessions create "fatally flawed" security risks

- 📦 Sandboxing is essential — Run LLM applications in controlled containers to limit what they can access and execute

- 🔄 Task segmentation — Break complex workflows into smaller, human-reviewable steps where each sub-task blocks at least one trifecta element

- 👁️ Human oversight required — Keep humans in the loop for reviewing and controlling AI actions, especially for sensitive operations

- ⚠️ Industry-wide problem — As Bruce Schneier notes, there are currently "zero agentic AI systems that are secure against these attacks" — it's an existential challenge the industry is largely ignoring
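
The trifecta rule and the task-segmentation advice above can be expressed as a simple design-time check. The following is a minimal sketch, not a defense in itself: the `AgentTask` type, its field names, and the example workflow are all hypothetical and introduced only for illustration. It assumes each sub-task honestly declares its capabilities, and it flags any sub-task that combines all three risk factors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentTask:
    """Hypothetical description of one step in an agent workflow."""
    name: str
    reads_sensitive_data: bool
    sees_untrusted_content: bool
    can_communicate_externally: bool


def has_lethal_trifecta(task: AgentTask) -> bool:
    """Return True when all three risk factors are present at once."""
    return (
        task.reads_sensitive_data
        and task.sees_untrusted_content
        and task.can_communicate_externally
    )


def tasks_needing_redesign(tasks: list[AgentTask]) -> list[str]:
    """Names of sub-tasks that fail to block any trifecta element."""
    return [t.name for t in tasks if has_lethal_trifecta(t)]


# Hypothetical workflow: the first two steps each block at least one
# element; the third combines all three and should be split or restricted.
workflow = [
    AgentTask("summarize_public_ticket", False, True, False),
    AgentTask("query_customer_db", True, False, False),
    AgentTask("triage_and_email", True, True, True),
]

print(tasks_needing_redesign(workflow))  # prints ['triage_and_email']
```

A check like this only catches risky combinations on paper. Actually enforcing the declared limits still depends on the sandboxing and human review described above.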

That’s all for this week’s edition

I hope you liked it, and you’ve learned something — if you did, don’t forget to give a thumbs-up, add your thoughts as comments, and share this issue with your friends and network.

See you all next week 👋
